Eye in the sky

Researchers at Osaka University generate high-quality datasets of textured 3D building models by using machine learning to digitally remove clouds from aerial images, an approach that may aid urban planning or recovery after natural disasters

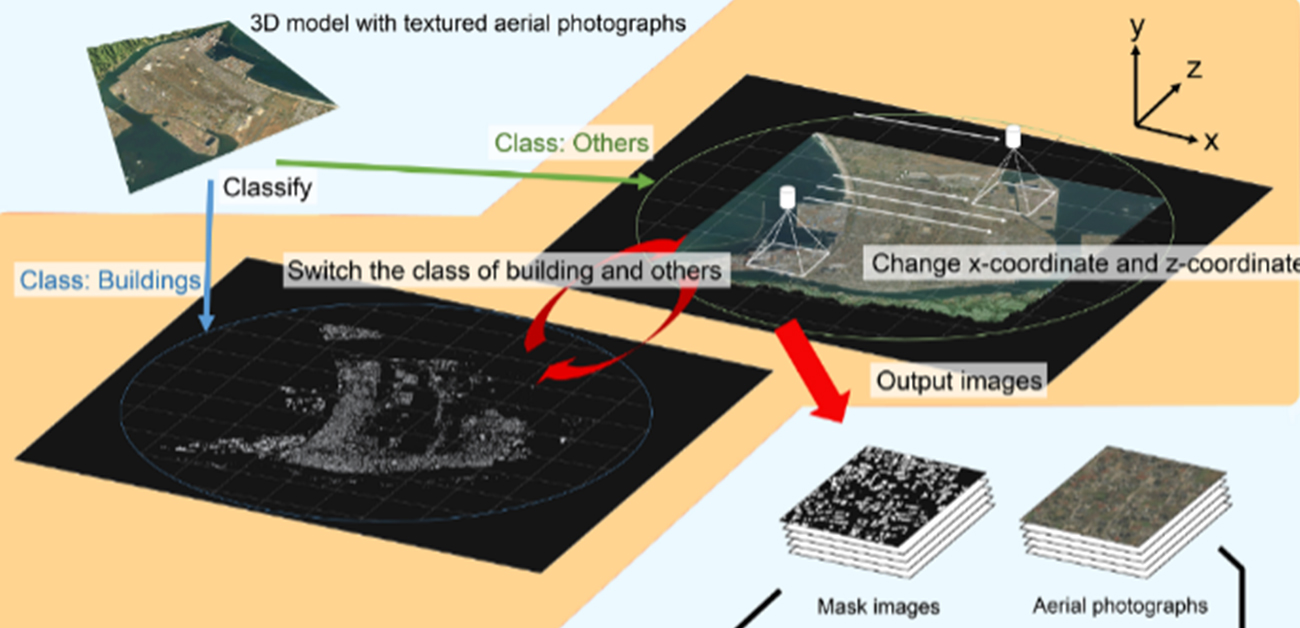

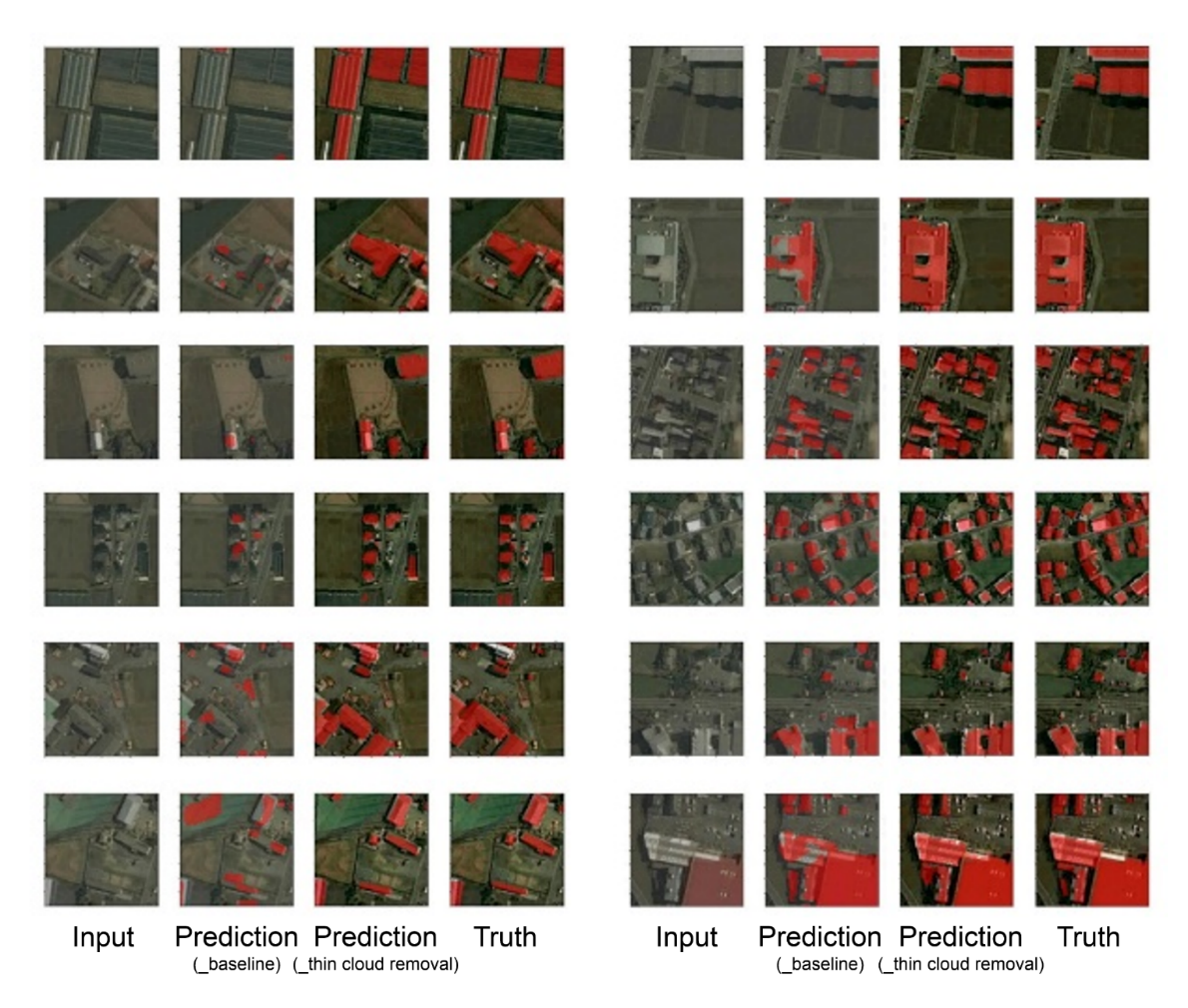

Scientists from the Division of Sustainable Energy and Environmental Engineering at Osaka University used an established machine learning technique called generative adversarial networks to digitally remove clouds from aerial images. By using the resulting cloud-free images as textures for 3D models, they were able to automatically generate more accurate datasets of building image masks. By setting two artificial intelligence networks against each other, the team improved the data quality without the need for previously labeled images. This work may help automate computer vision tasks critical to civil engineering.

Machine learning is a powerful method for accomplishing artificial intelligence tasks, such as filling in missing information. One popular application is repairing obscured images, for example, aerial images of buildings that are blocked by clouds. While this can be done by hand, it is very time-consuming, and even the machine learning algorithms currently available require many training images to work. Thus, improving the representation of buildings in virtual 3D models using aerial photographs requires additional steps.

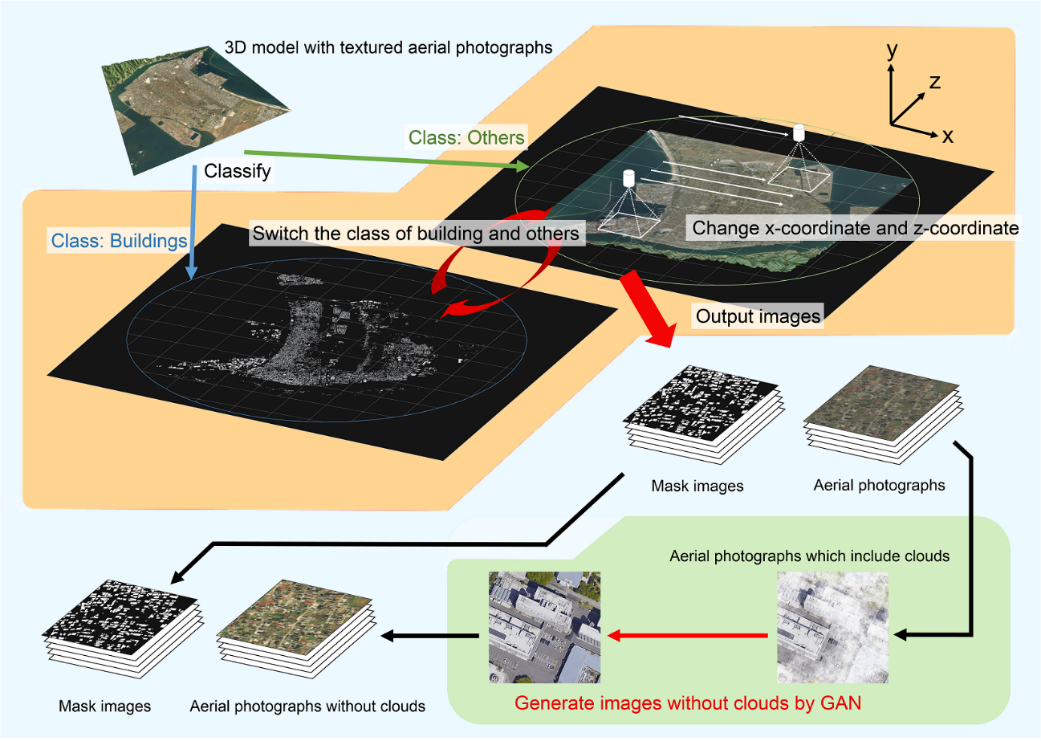

Now, researchers at Osaka University have improved the accuracy of automatically generated datasets by applying an existing machine learning method called generative adversarial networks (GANs). The idea of a GAN is to pit two different algorithms against each other. One is the “generative network,” which proposes reconstructed images without clouds. Competing against it is the “discriminative network,” which uses a convolutional neural network to try to tell the digitally repaired pictures apart from actual cloud-free images. Over time, both networks get better at their respective jobs, leading to highly realistic images with the clouds digitally erased. “By training the generative network to ‘fool’ the discriminative network into thinking an image is real, we obtain reconstructed images that are more self-consistent,” first author Kazunosuke Ikeno explains.
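The adversarial setup described above can be expressed as two losses, one minimized by each network. The Python snippet below is a minimal illustration, assuming the standard binary cross-entropy GAN formulation with the common "non-saturating" generator loss; the function names and scores are hypothetical, and the published model may use a different or extended objective. Here `d_real` stands for the discriminator's probability scores on genuine cloud-free photographs and `d_fake` for its scores on the generator's reconstructions.

```python
import numpy as np


def bce(pred, target, eps=1e-7):
    """Mean binary cross-entropy between predicted probabilities and 0/1 targets."""
    pred = np.clip(np.asarray(pred, dtype=float), eps, 1.0 - eps)  # avoid log(0)
    target = np.asarray(target, dtype=float)
    return float(-np.mean(target * np.log(pred) + (1.0 - target) * np.log(1.0 - pred)))


def discriminator_loss(d_real, d_fake):
    """Low when real images are scored near 1 and reconstructions near 0."""
    return bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake))


def generator_loss(d_fake):
    """Low when the discriminator is 'fooled' into scoring reconstructions near 1 (real)."""
    return bce(d_fake, np.ones_like(d_fake))


# Hypothetical discriminator scores (probability that an image is a real cloud-free photo).
d_real = np.array([0.9, 0.8])  # scores on genuine cloud-free images
d_fake = np.array([0.1, 0.2])  # scores on generator reconstructions

print(round(discriminator_loss(d_real, d_fake), 4))  # 0.3285
print(round(generator_loss(d_fake), 4))              # 1.956
```

In this toy case the discriminator is winning, so its loss is low and the generator's is high. In a full training loop the two losses would be minimized in alternation by gradient descent, which is what drives both networks to improve over time.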

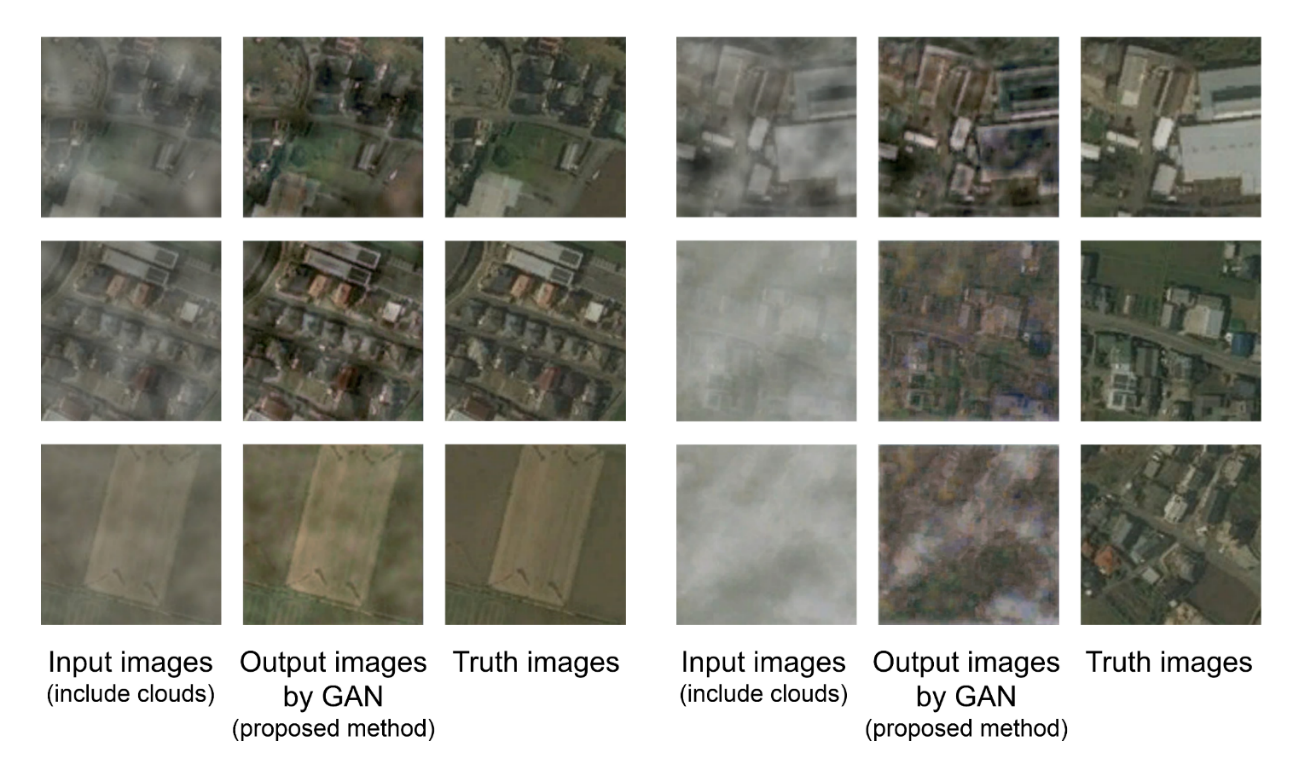

The team used 3D virtual models with photographs from an open-source dataset as input. This allowed for the automatic generation of digital “masks” that overlaid reconstructed buildings over the clouds. “This method makes it possible to detect buildings in areas without labeled training data,” senior author Tomohiro Fukuda says. The trained model could detect buildings with an intersection over union (IoU) value of 0.651; IoU measures how closely the detected building area matches the actual building area, with a value of 1 indicating a perfect match. This method can be extended to improve the quality of other datasets in which some areas are obscured, such as medical images.
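The intersection over union score compares a predicted building mask with a ground-truth mask: the number of pixels marked as building in both masks, divided by the number marked as building in either. A minimal Python sketch follows, assuming masks are boolean NumPy arrays of equal size; the `rect_mask` helper and the toy footprints are hypothetical stand-ins for the masks the study generated from 3D models.

```python
import numpy as np


def rect_mask(shape, rects):
    """Hypothetical helper: rasterize axis-aligned building footprints into a boolean mask.

    Each rect is (row0, col0, row1, col1), half-open like Python slices.
    """
    mask = np.zeros(shape, dtype=bool)
    for r0, c0, r1, c1 in rects:
        mask[r0:r1, c0:c1] = True
    return mask


def iou(pred_mask, true_mask):
    """Intersection over union of two same-shaped boolean masks."""
    pred = np.asarray(pred_mask, dtype=bool)
    true = np.asarray(true_mask, dtype=bool)
    if pred.shape != true.shape:
        raise ValueError("masks must have the same shape")
    union = np.logical_or(pred, true).sum()
    if union == 0:
        return 1.0  # convention: two empty masks agree perfectly
    intersection = np.logical_and(pred, true).sum()
    return float(intersection / union)


true_mask = rect_mask((8, 8), [(2, 2, 6, 6)])  # 16 building pixels
pred_mask = rect_mask((8, 8), [(3, 2, 7, 6)])  # 16 pixels, shifted down one row
print(iou(pred_mask, true_mask))  # 12 / 20 = 0.6
```

Because the union is in the denominator, IoU penalizes both missed building pixels and falsely detected ones, which is why it is a common choice for scoring segmentation masks.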

Fig.1 Conceptual diagram of the proposed method.

(credit: © 2021 Kazunosuke IKENO et al., Advanced Engineering Informatics)

Fig.2 Comparison of cloudless images and images with clouds removed by the GAN.

(credit: © 2021 Kazunosuke IKENO et al., Advanced Engineering Informatics)

Fig.3 Building detection results for aerial photographs of Sakaiminato City by each model.

(credit: © 2021 Kazunosuke IKENO et al., Advanced Engineering Informatics)

The article, “An enhanced 3D model and generative adversarial network for automated generation of horizontal building mask images and cloudless aerial photographs,” was published in Advanced Engineering Informatics (DOI: https://doi.org/10.1016/j.aei.2021.101380).

Related Links

Fukuda Tomohiro (Researchers Database)