A moth’s virtual reality

Researchers build a multi-modal VR system to study the behavior of male silkmoths searching for females using visual, wind, and odor signals, helping to shed light on how an insect deals with conflicting information

A team led by the Graduate School of Engineering Science at Osaka University and Department of Systems and Control Engineering at Tokyo Institute of Technology has shed light on the search behavior of moths using a specially constructed virtual reality system that can independently apply visual, wind, and odor stimulation. This work may help in the development of automated robots that can find chemical leaks or that operate in search-and-rescue environments.

The ability of most organisms to survive and reproduce depends on how well they can navigate their environment using sensory information. Often, data from several modalities, such as vision and olfaction, must be successfully integrated to determine the correct behavior. This is particularly true for search tasks, such as looking for food or potential mates when an odor or pheromone signal is present. However, the mechanism by which insects find the source of an odor, and the impact of conflicting data sources, remain largely unknown.

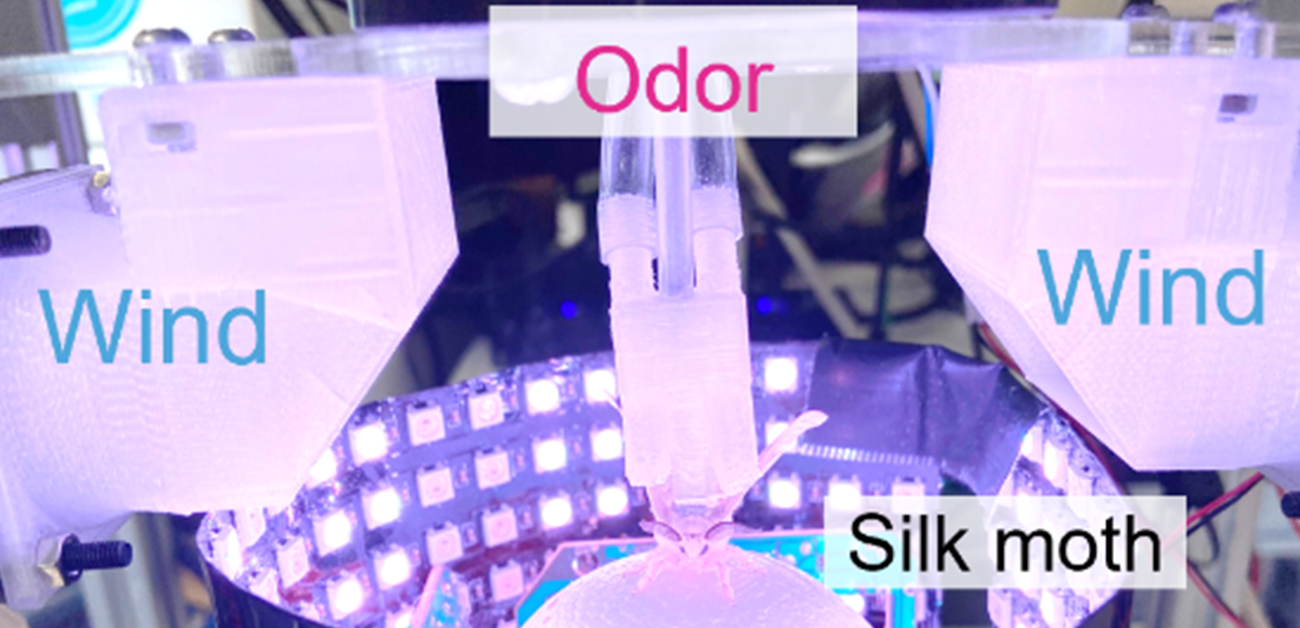

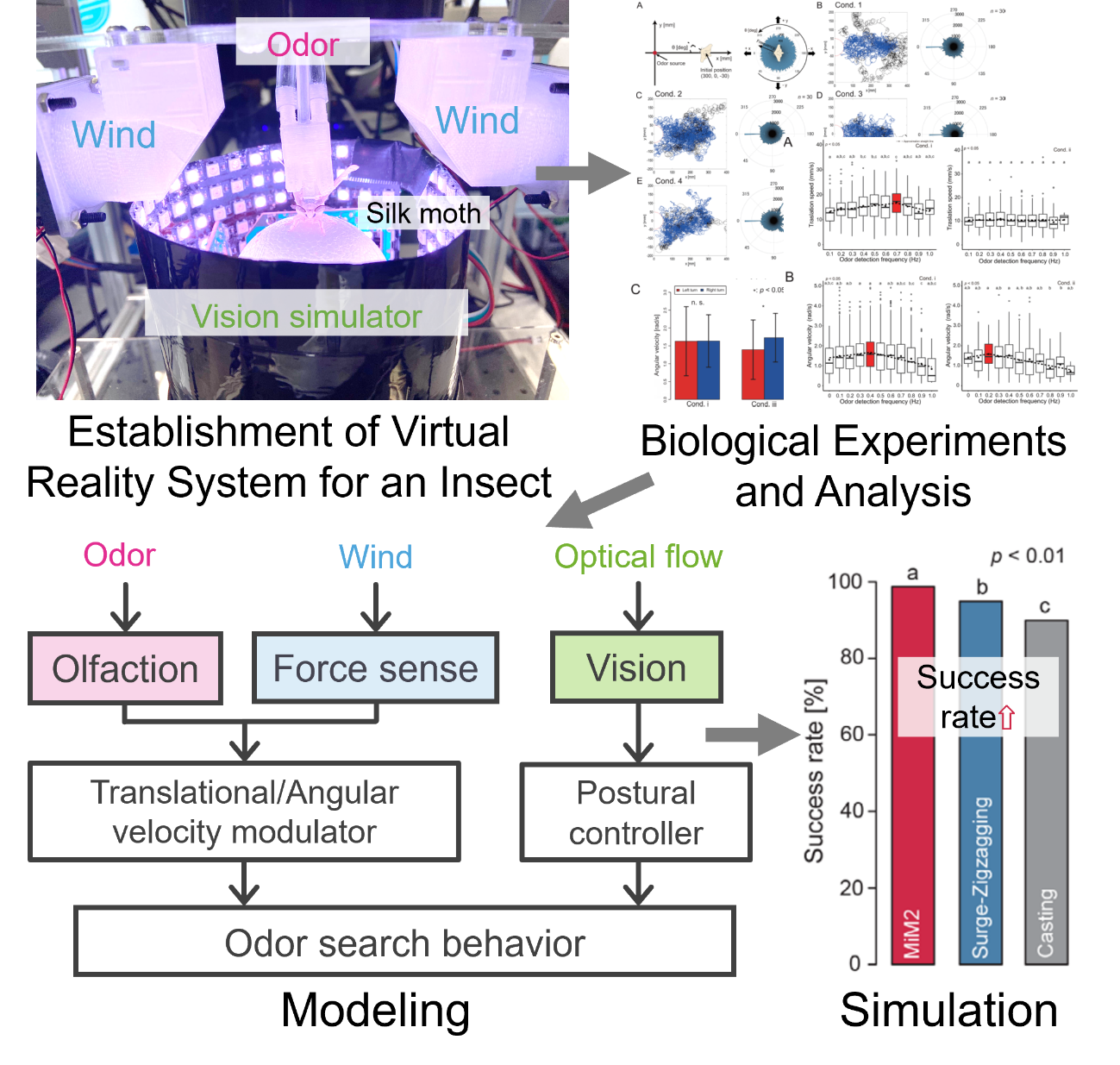

To study this issue, the team built a virtual reality system for male silkmoths that could present odor, wind, and visual stimuli. Air flow was controlled for the front, back, left, and right sides of the moth. At the same time, a pheromone could be applied to the two antennae independently using odor discharge ports. A 360-degree array of LED lights, which rotated automatically based on the motion of the moth, provided visual feedback. “The VR system we developed for this study can monitor female search behavior in a complex environment,” study first author Mayu Yamada explains.

The behavioral experiments using the VR system revealed that the silkmoth had the highest chance of navigating successfully when the odor, visual, and wind information were all provided accurately. However, the search success rate was reduced if the wind direction information conflicted with the odor stimulus. In this case, the silkmoth was seen to move more carefully, reflecting a modulation of behavior based on the degree of complexity of the environment.

The team recorded a migration probability map to visualize how differences in environmental conditions affected the behavioral trajectory. They also developed a new mathematical model that not only reproduced the silkmoth search behavior, but also improved the search success rate relative to the conventional odor-source search algorithm.
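This article does not say how the migration probability map was computed. As a rough illustration only, one common way to build such a map is to divide the arena into a grid, count how often recorded positions fall into each cell, and normalize the counts so they sum to one. The sketch below assumes trajectories are lists of (x, y) positions; the function name, arena bounds, and grid size are hypothetical choices and are not taken from the study.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def occupancy_probability_map(
    trajectories: Sequence[Sequence[Point]],
    x_range: Tuple[float, float],
    y_range: Tuple[float, float],
    nx: int,
    ny: int,
) -> List[List[float]]:
    """Return an ny-by-nx grid of visit probabilities (hypothetical helper).

    Each cell holds the fraction of all in-bounds trajectory samples that
    fell inside that cell. Row 0 corresponds to the lowest y values.
    """
    x_min, x_max = x_range
    y_min, y_max = y_range
    counts = [[0] * nx for _ in range(ny)]
    total = 0

    for trajectory in trajectories:
        for x, y in trajectory:
            # Ignore samples outside the assumed arena bounds.
            if not (x_min <= x <= x_max and y_min <= y <= y_max):
                continue
            # Map the position to a grid cell; clamp the upper edge
            # so that x == x_max or y == y_max lands in the last cell.
            col = min(int((x - x_min) / (x_max - x_min) * nx), nx - 1)
            row = min(int((y - y_min) / (y_max - y_min) * ny), ny - 1)
            counts[row][col] += 1
            total += 1

    if total == 0:
        # No usable samples: return an all-zero map rather than dividing by zero.
        return [[0.0] * nx for _ in range(ny)]

    return [[c / total for c in row] for row in counts]


# Two made-up trajectories in a unit-square arena, binned on a 2x2 grid.
example_trajectories = [
    [(0.1, 0.1), (0.6, 0.1)],
    [(0.6, 0.6), (0.6, 0.9)],
]
example_map = occupancy_probability_map(
    example_trajectories, (0.0, 1.0), (0.0, 1.0), nx=2, ny=2
)
```

Comparing maps built this way under different stimulus conditions would show where trajectories concentrate in each case, which is the kind of comparison the map described above is meant to support.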

“We think that this multi-sensory integration mechanism will help with the motion algorithm of automated rescue robots,” senior author Shunsuke Shigaki says. This would allow search robots to find chemical leaks more efficiently.

Fig. 1 Research procedure for behavioral experiments that use insect VR for model validation (credit: © 2021 Yamada et al., eLife)

The article, “Multisensory-motor integration in olfactory navigation of silkmoth, Bombyx mori, using virtual reality system,” was published in eLife at DOI: https://doi.org/10.7554/eLife.72001.