New intelligence for understanding the universe: quantum and AI discover anomalous energy radiation

A world first: combining X-ray space observation data with quantum machine learning

- Using a simulated quantum machine learning model that combines quantum computing with machine learning, the researchers detected more candidate anomalous phenomena in actual data on fluctuations in cosmic X-ray brightness than a conventional classical model did.

- Methods will be needed that can automatically detect even the slightest anomalous fluctuations in the vast amounts of video data of the universe captured by high-performance astronomical telescopes. This research showed, in simulation, that incorporating quantum computing technology into machine learning makes it possible to detect anomalous phenomena efficiently.

- Through the anomalies detected in space, the results may help shed light on the evolution of the universe and on physical phenomena. They also open the door to applying quantum machine learning in astronomy.

Outline

A research group consisting of Assistant Professor Taiki Kawamuro of the Graduate School of Science at the University of Osaka; Associate Professor Shinya Yamada and doctoral student Yusuke Sakai of Rikkyo University; Chief Scientist Shigehiro Nagataki and Senior Research Scientist Shunji Matsuura of RIKEN; and Assistant Professor Satoshi Yamada of Tohoku University built a quantum machine learning model that combines quantum computing with machine learning. Applying it to large-scale cosmic X-ray fluctuation data collected over roughly the past 24 years by the XMM-Newton X-ray satellite, operated by the European Space Agency (ESA), the group captured 113 anomalous energy (X-ray) radiation phenomena.

In the near future, far larger volumes of video data will capture changes in the universe in more detail than is possible today. Cutting-edge machine learning models are therefore being actively developed to find variations beyond what humans anticipate and to help elucidate mysteries of the universe, such as its diversity and hidden physical phenomena.

In this context, the research group conducted simulation-based experiments to explore the usability of quantum machine learning, which combines machine learning with quantum computing. They embedded quantum circuits into a type of neural network called LSTM (Long Short-Term Memory), set it up so that the circuits could be computed on a quantum computer, and used it to detect anomalous fluctuations in brightness. As a result, they identified more candidates than the classical LSTM model did.

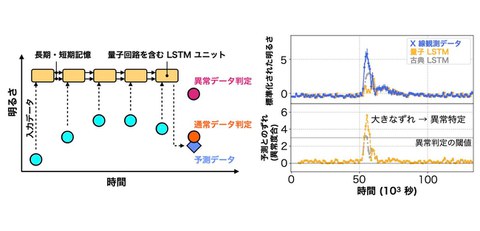

Fig. 1

Left: Overview of the quantum LSTM used in the study and of anomalous brightness detection. Input data are fed continuously into an LSTM unit containing a quantum circuit, which predicts the brightness (diamond). If an observed point deviates only slightly from the prediction, it is judged to be normal (orange dots); if the deviation is large, it is judged to be an anomalous phenomenon (burgundy dot).

Right: An example of applying the quantum and classical LSTMs to actual changes in X-ray brightness. The upper panel shows the observed data, the quantum LSTM predictions, and the classical LSTM predictions. The lower panel shows the discrepancy between the observed data and each prediction. The quantum LSTM shows a larger deviation, that is, a stronger anomaly signal.
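The decision rule in Fig. 1 (left) flags a point as anomalous when its deviation from the prediction is large. The article does not say how "large" is defined, so the sketch below assumes a hypothetical robust threshold: the median absolute residual plus `k` times a MAD-based estimate of the standard deviation. The function name `flag_anomalies` and the default `k=3.0` are illustrative choices, not the authors' settings.

```python
import numpy as np


def flag_anomalies(observed, predicted, k=3.0):
    """Return (mask, threshold); mask[i] is True where |observed - predicted| exceeds the threshold.

    Hypothetical rule: threshold = median(residual) + k * 1.4826 * MAD(residual),
    where 1.4826 rescales the MAD to a standard deviation for Gaussian noise.
    """
    observed = np.asarray(observed, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    residual = np.abs(observed - predicted)          # per-point deviation from the prediction
    med = np.median(residual)
    mad = np.median(np.abs(residual - med))          # median absolute deviation of the residuals
    threshold = med + k * 1.4826 * mad
    return residual > threshold, threshold


# Toy light curve: a steady source with one sudden flare at index 4.
observed = [1.0, 1.1, 0.9, 1.0, 5.0, 1.05]
predicted = [1.0] * 6
mask, threshold = flag_anomalies(observed, predicted)
print(np.flatnonzero(mask))  # → [4]
```

A median/MAD threshold is used here because a single large flare would inflate a plain mean-plus-standard-deviation threshold and could hide itself; other rules are equally plausible.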

Research Background

Sudden fluctuations, such as stellar explosions and activity around black holes, occur every day in the universe and are thought to be important for exploring the formation of cosmic structure and physics under extreme conditions. Researchers occasionally discover unexpected fluctuations, and finding such anomalies is at the forefront of efforts to unravel mysteries of the universe, such as its diversity and hidden physical phenomena. In the future, cutting-edge telescopes such as the Vera C. Rubin Observatory (formerly the Large Synoptic Survey Telescope, LSST) and NewAthena are expected to acquire far more data recording the time-varying dynamics of the universe at various wavelengths of light, including X-rays. There is therefore a need for methods that can automatically detect even slight anomalous fluctuations in these data, and machine learning has been explored for this purpose. In response, this research is the world's first to verify, on a simulation basis, the usability of quantum machine learning, which incorporates features of quantum computing into machine learning.

Research Contents

The research group developed a machine learning method called Quantum Long Short-Term Memory (QLSTM) that incorporates quantum computing mechanisms (Fig. 1, left). Time and brightness data are fed sequentially into a unit with embedded quantum circuits, which ultimately predicts the brightness. Anomalous fluctuations can then be detected from deviations between the observed and predicted brightness (Fig. 1, right). The researchers did not use an actual quantum computer; instead, they simulated the quantum circuits to verify implementation and usability. Finally, the trained quantum machine learning model was applied to approximately 40,000 light curves acquired by ESA's X-ray satellite XMM-Newton. It detected 113 anomalous fluctuations, capturing more anomalies than the conventional classical LSTM model. The detected events included what appear to be the moment of a stellar explosion and quasi-periodic activity from a black hole.
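The press release does not specify the circuit design. As a hedged illustration only, the sketch below follows the general pattern of published QLSTM designs, in which small variational quantum circuits (VQCs) replace the linear maps inside each LSTM gate, and it simulates those circuits with a NumPy statevector, in the same spirit as this study simulating circuits rather than running them on hardware. The qubit count, the circuit layout (RY angle encoding, a CNOT chain, trainable RY layers), and the names `vqc` and `QLSTMCell` are assumptions, not the authors' model. Training, and the readout that would turn `h` into a brightness prediction, are omitted.

```python
import numpy as np

N_QUBITS = 4  # hypothetical circuit width; the article does not state it


def ry(theta):
    """Single-qubit RY rotation (real-valued matrix)."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]])


def on_qubit(gate, q, n):
    """Embed a 1-qubit gate as a 2**n x 2**n operator (qubit 0 = most significant bit)."""
    full = np.eye(1)
    for k in range(n):
        full = np.kron(full, gate if k == q else np.eye(2))
    return full


def cnot(control, target, n):
    """CNOT as a 2**n x 2**n permutation matrix: flip target bit when control bit is 1."""
    dim = 2 ** n
    m = np.zeros((dim, dim))
    for i in range(dim):
        j = i ^ (1 << (n - 1 - target)) if (i >> (n - 1 - control)) & 1 else i
        m[j, i] = 1.0
    return m


def vqc(angles, weights):
    """Simulated VQC: RY angle encoding, then per layer a CNOT chain and trainable RYs.

    Returns the Pauli-Z expectation value of every qubit, each in [-1, 1].
    """
    n = len(angles)
    state = np.zeros(2 ** n)
    state[0] = 1.0  # start in |00...0>
    for q, a in enumerate(angles):
        state = on_qubit(ry(a), q, n) @ state
    for layer in weights:
        for q in range(n - 1):
            state = cnot(q, q + 1, n) @ state
        for q, w in enumerate(layer):
            state = on_qubit(ry(w), q, n) @ state
    probs = state ** 2  # amplitudes stay real because RY and CNOT are real
    idx = np.arange(2 ** n)
    return np.array([probs @ (1 - 2 * ((idx >> (n - 1 - q)) & 1)) for q in range(n)])


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class QLSTMCell:
    """LSTM cell whose four gates each pass through a simulated VQC (hypothetical design)."""

    def __init__(self, input_size, hidden_size, n_layers=1, seed=0):
        rng = np.random.default_rng(seed)
        concat = input_size + hidden_size
        # Per gate (0 = forget, 1 = input, 2 = candidate, 3 = output):
        # classical projection into the circuit, circuit weights, projection out of it.
        self.w_in = rng.normal(0.0, 0.1, (4, N_QUBITS, concat))
        self.w_q = rng.uniform(0.0, 2 * np.pi, (4, n_layers, N_QUBITS))
        self.w_out = rng.normal(0.0, 0.1, (4, hidden_size, N_QUBITS))

    def step(self, x, h, c):
        v = np.concatenate([h, x])
        pre = [self.w_out[k] @ vqc(self.w_in[k] @ v, self.w_q[k]) for k in range(4)]
        f, i, o = sigmoid(pre[0]), sigmoid(pre[1]), sigmoid(pre[3])
        g = np.tanh(pre[2])
        c_new = f * c + i * g       # standard LSTM cell-state update
        h_new = o * np.tanh(c_new)  # standard LSTM hidden-state output
        return h_new, c_new


# Usage: feed (time, brightness) pairs one step at a time (toy values, untrained weights).
cell = QLSTMCell(input_size=2, hidden_size=3)
h, c = np.zeros(3), np.zeros(3)
for t, flux in [(0.0, 1.0), (0.1, 1.2), (0.2, 0.9)]:
    h, c = cell.step(np.array([t, flux]), h, c)
```

Only the gate computations differ from a classical LSTM here; the cell- and hidden-state updates are the usual LSTM equations, which is what lets a quantum and a classical LSTM be compared on the same light curves.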

Social Impact of the Research

This study is the world's first to apply quantum machine learning to X-ray observation data from space and verify its usability. The technology is expected to become a new foundation for efficiently detecting anomalous astronomical phenomena in an era when time-varying cosmic phenomena are attracting increasing attention. Moreover, by applying quantum computing technology to actual astronomical data, this research breaks into previously unexplored territory and is considered a groundbreaking step toward the full-scale application of quantum information science in astronomy. In addition, research applying quantum machine learning to astronomical data benefits from the diversity and openness of those data, which make it easy to devise and test a variety of applications. Such work is expected to produce new technologies that may help solve real-world social problems in the near future.

Notes

The article, “Quantum Machine Learning for Identifying Transient Events in X-Ray Light Curves,” was published online in The Astrophysical Journal, an American scientific journal (DOI: https://doi.org/10.3847/1538-4357/adda43).