Metaverse laboratory where atoms can be manipulated with “bare hands.” Developing a mixed reality (MR) experimental system using virtual reality and scanning probe microscopy (SPM)

Key Findings

- A research group has developed an atomic-level mixed reality experimental system that allows users to move smoothly between virtual space and the real world of a laboratory by wearing a special headset.

- Atomic manipulation, which previously required complex operations, can now be performed with just hand gestures, making it possible to "see," "touch" and "move" atoms with intuitive movements like grabbing an actual object.

- The "metaverse laboratory," where researchers from around the world can share the same experiments in a virtual space, is expected to realize a new form of collaborative research at the atomic level and lead to advances in nanotechnology research and science education.

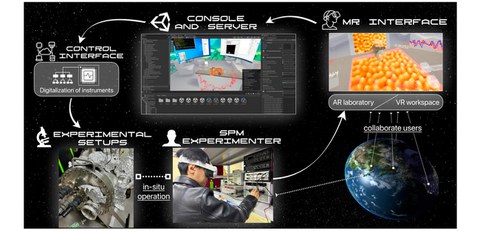

Fig. 1

A metaverse laboratory framework with virtual reality and scanning probe microscopy (SPM).

Credit: Diao, Z., Yamashita, H. & Abe, M. (2025). A metaverse laboratory setup for interactive atom visualization and manipulation with scanning probe microscopy. Scientific Reports, 15, 17490. https://doi.org/10.1038/s41598-025-01578-y. Licensed under CC BY 4.0.

Outline

A research group led by Assistant Professor Zhuo Diao of the Department of Systems Innovation, Graduate School of Engineering Science, the University of Osaka, and Professor Masayuki Abe of the university-affiliated Center for Science and Technology under Extreme Conditions has developed a mixed reality (MR) experimental system that allows users to move smoothly between virtual space and the real space of a laboratory. With it, users can intuitively observe and move silicon atoms projected in front of their eyes at a magnification of 50 million times (Fig. 1).
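To give a sense of what a magnification of 50 million times means, the short calculation below scales an atom-sized object into headset space. It is illustrative arithmetic only: the atom diameter of about 0.2 nm is an assumed round figure, not a value from the paper, and the function name is hypothetical.

```python
# Illustrative arithmetic only; not taken from the published system.
MAGNIFICATION = 50_000_000  # "50 million times", as stated in the text

# Assumed order-of-magnitude size of a single atom (about 0.2 nm).
ATOM_DIAMETER_M = 0.2e-9


def apparent_size_m(real_size_m: float, magnification: float = MAGNIFICATION) -> float:
    """Return the displayed size in metres of an object with the given real size."""
    return real_size_m * magnification


if __name__ == "__main__":
    size_m = apparent_size_m(ATOM_DIAMETER_M)
    print(f"Apparent atom size: {size_m * 100:.1f} cm")
```

Under that assumption, a single atom appears roughly 1 cm across, which is a size a hand can plausibly pinch.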

In this new experimental system, researchers can seamlessly move between the real world of the laboratory and virtual space by wearing a special headset. The biggest feature is that they can "see," "touch," and "move" atoms with just hand gestures. Atomic manipulation, which previously required complex operations, can now be done with intuitive movements, just like grabbing an actual object. The research team used this system to successfully extract a single atom from a silicon surface. In addition, because this system utilizes metaverse technology, researchers from all over the world can participate in the same experiment from remote locations. It proposes a new form of scientific experimentation that makes atomic-level precision research more accessible and intuitive.

The results of this research were published in the online version of the British scientific journal Scientific Reports on Tuesday, May 20, 2025.

Research Background

The scanning probe microscope (SPM) is an indispensable measurement instrument in science and engineering, enabling surface observation from the nanoscale down to the atomic level. SPM provides an experimental environment not available with other equipment: it can evaluate the physical properties of single atoms, individual clusters, and local structures (spectroscopy), and it can move or remove individual surface atoms (atom manipulation). However, spectroscopy and atom manipulation experiments require advanced technique and precise control. Because they involve manipulation on an extremely small scale and are susceptible to vibrations and thermal fluctuations from the environment, such research has generally been conducted under restricted conditions, such as extremely low temperatures or ultra-high vacuum. Furthermore, because of the advanced experimental skills required, not everyone has been able to conduct these experiments.

To date, this research group has constructed highly digitalized SPM equipment and, using it as a base, has presented new experimental systems, such as autonomous SPM experiments using AI (https://doi.org/10.1002/smtd.202400813) and SPM experiments using large language models (https://doi.org/10.1088/1361-6501/adbf3a).

Research Contents

The research group has developed an innovative system that integrates virtual reality technology with SPM. The greatest feature of this system is its mixed reality (MR) functionality, which allows users to move smoothly between the virtual space and the real space of the laboratory. By simply putting on the special headset, they can intuitively view and move atoms. It is particularly noteworthy that the digitalization of advanced SPM instruments has made it possible to automate complex and delicate instrument operations, enabling researchers to manipulate atoms using only their own hand movements. As a result, the extremely small world of nanometers can be handled with a natural sensation, like grabbing an actual object in front of you.
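One way to picture how a hand movement could become a probe command is sketched below. This is a minimal sketch under stated assumptions, not the authors' implementation: the pinch threshold, the scan-range limit, the `Hand` type, and both function names are hypothetical. Only the scale factor of 50 million comes from the article.

```python
import math
from dataclasses import dataclass

MAGNIFICATION = 50_000_000  # scale factor quoted in the article
PINCH_THRESHOLD_M = 0.02    # hypothetical: fingertips under 2 cm apart count as a "grab"
SCAN_RANGE_NM = 10.0        # hypothetical half-width of the permitted scan area


@dataclass
class Hand:
    """Fingertip positions in metres, in headset coordinates (hypothetical type)."""
    thumb_tip: tuple[float, float, float]
    index_tip: tuple[float, float, float]


def is_pinching(hand: Hand) -> bool:
    """Treat the hand as grabbing when thumb and index fingertips are close together."""
    return math.dist(hand.thumb_tip, hand.index_tip) < PINCH_THRESHOLD_M


def hand_to_tip_nm(x_m: float, y_m: float) -> tuple[float, float]:
    """Scale a hand position (metres) down to a probe target (nanometres).

    The result is clamped so a large arm movement cannot send the probe
    outside the assumed scan range.
    """
    def convert(value_m: float) -> float:
        value_nm = value_m / MAGNIFICATION * 1e9
        return max(-SCAN_RANGE_NM, min(SCAN_RANGE_NM, value_nm))

    return convert(x_m), convert(y_m)


if __name__ == "__main__":
    hand = Hand(thumb_tip=(0.05, 0.0, 0.3), index_tip=(0.06, 0.0, 0.3))
    if is_pinching(hand):
        print(hand_to_tip_nm(hand.index_tip[0], hand.index_tip[1]))
```

Dividing by the magnification means a 5 cm hand movement corresponds to a 1 nm probe displacement in this sketch. The clamp illustrates why automated, software-mediated control matters: the instrument, not the user's arm, sets the safe limits.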

In fact, by using this system, the research group succeeded in targeting and extracting a single atom from a silicon surface at room temperature, paving the way for practical application of advanced atomic manipulation. Delicate atomic manipulation, which was difficult to perform using conventional methods, can now be performed more intuitively and efficiently.

In addition, this system functions as a "metaverse laboratory," allowing researchers from all over the world to participate in the same experiment from remote locations. This enables a new form of collaborative atomic-level precision research that transcends borders. The technology not only accelerates the progress of nanotechnology research, but also opens up new possibilities for science education.

Social Impact of this Research Result

The trend of incorporating MR into measurement and operation systems has the potential to accelerate nanoscale research in three areas: remote, collaborative, and autonomous. First, by creating a digital twin of the equipment in a virtual space, experts from all over the world can participate simultaneously, which is expected to improve equipment operating rates and promote the exchange of knowledge across fields. Second, since the MR environment makes it easy to visualize and share operation history and experimental conditions in chronological order, it is believed to be well suited to data-driven AI analysis. If the system learns how to optimize the probe trajectory and detect anomalies, it can be connected naturally to an autonomous experimental system, enabling faster material exploration and equipment tuning. Third, the intuitive interface that integrates visual and tactile feedback is highly effective for education and allows experts to transfer their skills to their successors easily. If new researchers can reach advanced operation at an early stage, the competitiveness of the entire research community is expected to rise. Overall, MR-integrated measurement is expected to deliver growth on three fronts, namely freedom from geographical constraints, collaborative optimization with AI, and more efficient human resource development, and to accelerate innovation in nano-measurement and nano-operation.

Notes

The article, “A metaverse laboratory setup for interactive atom visualization and manipulation with scanning probe microscopy,” was published in the British scientific journal Scientific Reports at DOI: https://doi.org/10.1038/s41598-025-01578-y.

Explanatory video: https://www.youtube.com/watch?v=fzmzXuzAWhQ

Technical Glossary

- Head-mounted display (HMD)

A head-mounted display (HMD) is a key device for merging the real world with virtual space. When it is worn on the head, virtual images and information are overlaid on the user's field of vision, providing an experience that is integrated with real space. Representative models include Meta Quest 3, Microsoft HoloLens, and Magic Leap, which are equipped with spatial recognition cameras, depth sensors, microphones, speakers, and other components, and can detect the user's position, movements, and gaze in real time. In addition, virtual objects can be manipulated using hand gestures and voice commands, allowing intuitive interaction without a mouse or keyboard. This has led to its use in a variety of fields, including design, medicine, education, and research. In the future, even more natural and immersive experiences are expected as devices become lighter, the field of view widens, and integration with AI advances.

- Mixed reality (MR)

Mixed reality (MR) overlays real and virtual spaces, providing an environment where users can perceive and interact with both spaces simultaneously. A head-mounted display and depth sensors measure the surroundings and precisely anchor holograms to desks and walls, enabling three-dimensional visualization and intuitive manipulation for design reviews, medical training, remote collaboration and more. Multiple people can share and edit the same model via the cloud, and AI can provide guidance according to the situation, improving learning efficiency and safety. In the future, it is expected that the range of applications will expand to collaboration with autonomous robots and smart city management.

- Scanning probe microscopy (SPM)

A scanning probe microscope observes surface structure with atomic-level resolution by bringing a sharp needle (probe) close to the sample surface and scanning it while detecting the interaction between the two. It uses signals such as tunneling current and atomic forces, and is widely used in research on semiconductors and biomolecules. It features extremely high spatial resolution and is contributing to the development of nanotechnology.