Virtual demolition

Scientists at Osaka University develop an augmented reality algorithm for viewing landscapes with buildings and/or pedestrians digitally removed, which may become standard for urban planning in busy areas with many distractions present

Researchers at Osaka University have demonstrated a prototype real-time augmented reality system that can virtually remove both static structures and moving objects. The system can be used to visualize future landscapes after urban renovations without interference from passing cars or pedestrians.

Augmented reality, in which simulated images are overlaid on pictures of actual locations in real time, has been used for both immersive games like Pokémon Go and practical applications such as construction planning. As the “augmented” in the name implies, characters or buildings are usually added to scenes, since virtually removing objects to show what lies behind them is a much more computationally intensive task. However, this is exactly what is needed to plan for demolitions, or to see what a landscape would look like without distracting vehicles or pedestrians moving through the scene.

Now, researchers at the Division of Sustainable Energy and Environmental Engineering at Osaka University have developed such a system. Their prototype combines deep learning with a video game graphics engine to render real-time landscape views in which buildings, trees, or even moving objects are virtually removed to reveal the background they previously obscured. “Previously, it has not been possible to visually remove moving objects, such as vehicles and pedestrians, from real-time landscape simulations,” corresponding author Tomohiro Fukuda says.

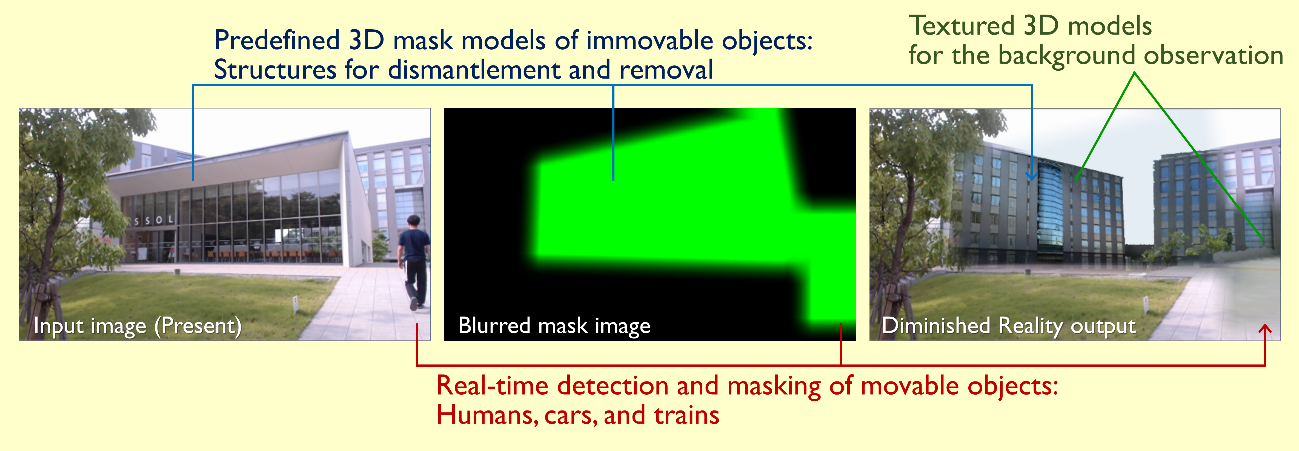

To simulate the removal of large immobile structures, like a building to be demolished, the method dynamically blends photographs of the background behind the building based on the current viewpoint of the camera. For moving objects, like passing cars, a machine-learning algorithm automatically detects and masks the region they obscure, which is then replaced with the appropriate current or future scene. The team tested the prototype on the campus of Osaka University and found that it could automatically detect and remove cars and pedestrians from the image.
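The two steps described above can be illustrated with a minimal sketch in Python with NumPy. It is not the authors' implementation: the inverse-distance weighting used to blend background photographs, the bounding-box format `(x0, y0, x1, y1)`, and the function names `blend_background`, `boxes_to_mask`, and `diminish` are all assumptions made for illustration. The deep-learning detector is also left out, so the boxes for moving objects are simply passed in.

```python
import numpy as np


def blend_background(photos, photo_positions, camera_position, eps=1e-6):
    """Blend background photos, weighting each by closeness to the camera.

    Assumption: inverse-distance weights stand in for whatever
    viewpoint-dependent blending the real system uses.
    """
    photos = np.asarray(photos, dtype=np.float64)            # (N, H, W, 3)
    offsets = np.asarray(photo_positions, dtype=np.float64) - np.asarray(
        camera_position, dtype=np.float64
    )
    distances = np.linalg.norm(offsets, axis=1)               # (N,)
    weights = 1.0 / (distances + eps)
    weights /= weights.sum()
    # Weighted sum over the photo axis gives one (H, W, 3) image.
    return np.tensordot(weights, photos, axes=1)


def boxes_to_mask(shape, boxes):
    """Rasterize hypothetical detector boxes (x0, y0, x1, y1) into a mask."""
    mask = np.zeros(shape, dtype=bool)
    for x0, y0, x1, y1 in boxes:
        mask[y0:y1, x0:x1] = True
    return mask


def diminish(frame, background, static_mask, moving_boxes):
    """Replace pixels covered by static or moving objects with background."""
    mask = static_mask | boxes_to_mask(static_mask.shape, moving_boxes)
    output = frame.copy()
    output[mask] = background[mask]
    return output, mask
```

In use, `static_mask` would mark the building to be demolished, `moving_boxes` would come from an object detector run on each camera frame, and `background` would be the blended view for the current camera position. Combining both masks before compositing means a pedestrian walking in front of the building is removed in the same pass as the building itself.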

“Diminished reality can dynamically remove distractions from our landscapes,” Fukuda says.

This research may help planners visualize a site's future appearance on location whenever renovations become necessary.

Fig.1 Proposed “diminished reality” (DR) system. Left: Input image captured by a web camera showing the current situation. Right: DR output image of the future landscape. A moving object (pedestrian) has been virtually removed in real time along with the immobile building.

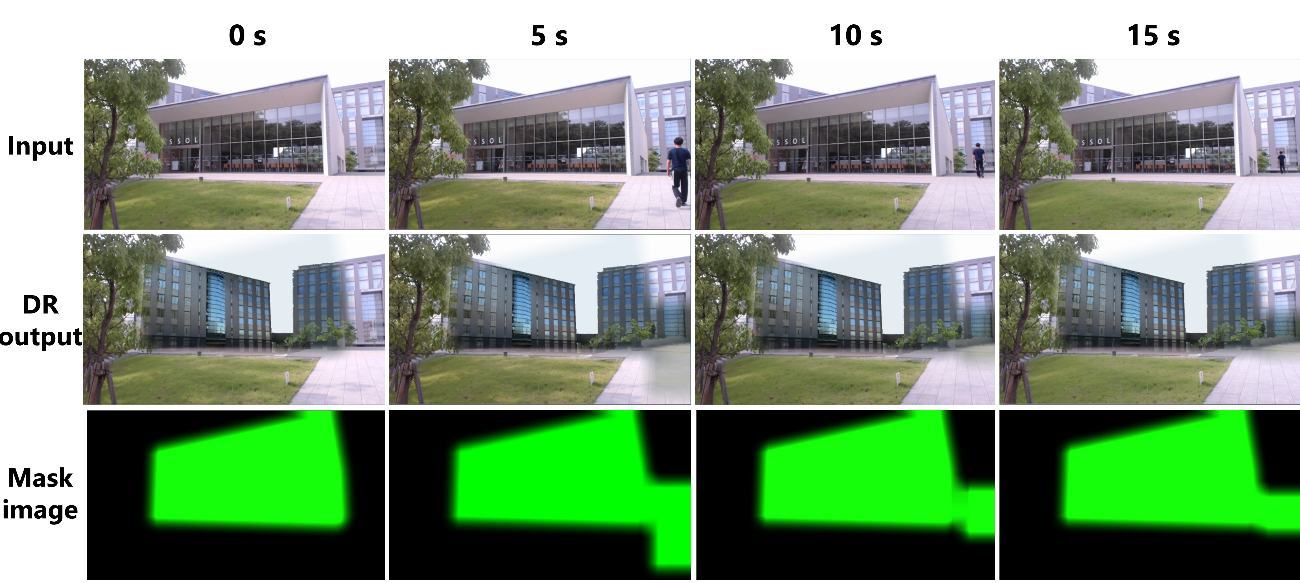

Fig.2 DR timeline (0–15 s). A pedestrian walks toward the building and is virtually removed throughout.

Fig.3 How the DR system works. In the input video captured by the web camera, moving objects such as the pedestrian are detected by deep learning, and are masked along with immobile objects like the building and virtually removed.

The article, “Diminished reality system with real-time object detection using deep learning for onsite landscape simulation during redevelopment,” was published in Environmental Modelling & Software at DOI: https://doi.org/10.1016/j.envsoft.2020.104759.