Artificial intelligence (AI) developed for automatic music composition based on brain waves

Will lead to systems for drawing out individuals’ latent potential through music stimuli

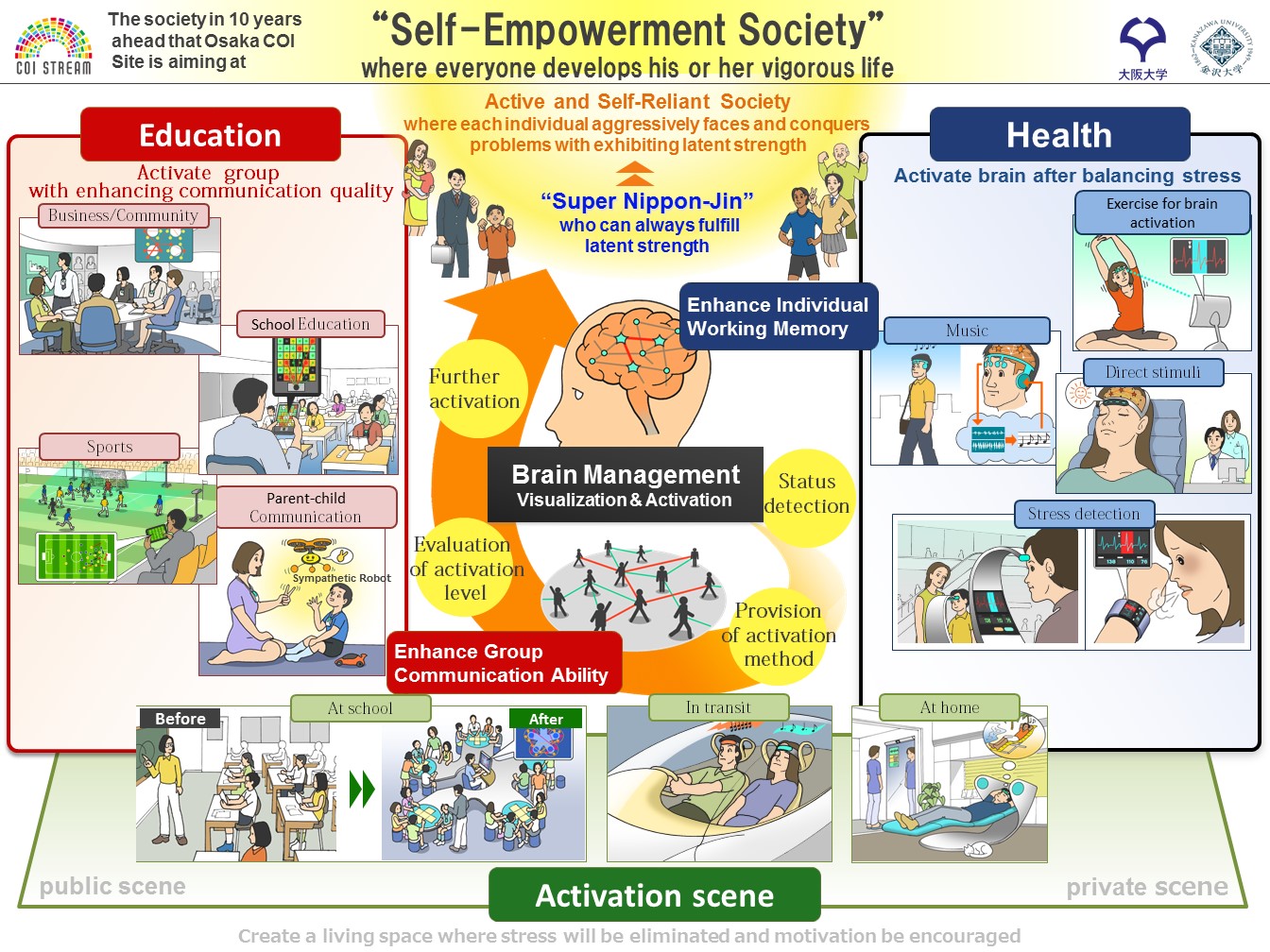

With the support of the Center of Innovation (COI) program of the Japan Science and Technology Agency (JST), Osaka University’s COI is promoting projects in which researchers in the fields of medicine, brain science, science, and engineering cooperate with industry in an effort to achieve Super Japanese Cultivation.

A group of researchers led by Professor NUMAO Masayuki at The Institute of Scientific and Industrial Research, Osaka University, together with Professor OTANI Noriko of the Media Studies Department, Tokyo City University, Crimson Technology Co., and IMEC in Belgium, has succeeded in developing AI that composes music automatically based on the listener’s brain responses to music.

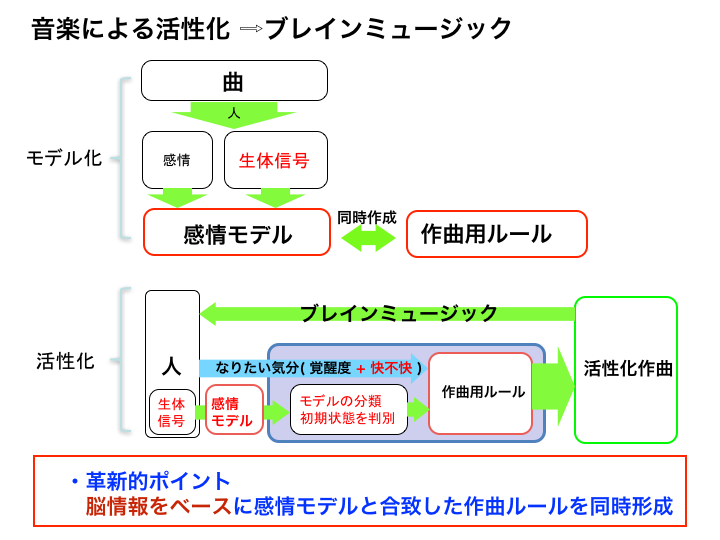

Future brain management systems are expected to repeat a cycle: detect the user’s brain state, provide a means of activating it, evaluate the resulting state of activation, and feed that evaluation back into further activation. Music is thought to be a prominent means of brain activation. However, conventional music recommendation systems only recommend songs similar to those a user has listened to before, and conventional automatic music composition systems require users to specify the characteristics of the song to be composed in detail. Neither approach was easy to link to reactivating the brain.

To address this, the group developed a brain sensor integrated into headphones. The sensor makes it easy to collect a user’s brain data while they listen to music, allowing the machine to learn the relationship between the user’s responses to the collected songs and their brain waves, and then to easily produce unique music that reactivates the user’s mental state. The music created by the machine is arranged quickly on the spot using Musical Instrument Digital Interface (MIDI) technology and played with rich tones on a synthesizer.

In the future, this technology will make it possible to measure the responses of an audience as well as those of individuals, and it is hoped that music will be created from the brain waves of audience members. It is also expected to lead to a system that helps people fully realize their potential, using music stimuli tailored to their individual states as measured from brain waves recorded at home.

A device running the autonomous music composition software was exhibited at the 3rd Wearable Expo 2017, held from Wednesday, January 18 through Friday, January 20, 2017.

Figure 1

Figure 2
